The Problems With AI-Generated Answers or Blog Posts

There are some significant problems with AI-generated content and answers provided online, and we’re only just starting to realise them.

Last Updated: September 22nd, 2023

By: Steve


I’ve been involved in the blog space for somewhere close to 20 years now. I’ve never made much money from it, though I’ve made some. I’m no guru on anything, especially on how to use a blog to make money online. But that’s not the point of this post. I keep an eye on online trends, and AI is the biggest trend of them all lately. I see “make money online” gurus recommending AI tools for creating blog posts all the time. It sounds like a wonderful idea. But I see significant problems with AI for the overall outlook for human knowledge.

AI Makes Stuff Up

Let’s be fair, the title ‘AI’ is nonsense. These things are actually ‘Large Language Models’ (LLMs). There’s little intelligence in them. They’re impressive, very impressive, and I don’t want to take away from that. But they’re NOT intelligent. They have no idea whether what they write is founded in reality or not. In this sense, they literally make stuff up.

This is a well-known problem called hallucination: the system hallucinates the answer.

The problem, though, is that those who know about LLMs know this, but the general public don’t. The programming is impressive, but all it’s doing is working out the likelihood of the words that might come next in a sentence, based on the subject it has been asked about. So it strings words together in a way that makes it look intelligent. Emphasis on looking intelligent.
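To make the “likelihood of the next word” idea a little more concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a small, made-up corpus and then greedily picks the most frequent follower. This is a big simplification and an assumption on my part as an illustration; real LLMs use neural networks over sub-word tokens and a much longer context. But the core point carries over: each word is chosen because it is statistically likely, not because anything has been checked against reality.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, how often every other word follows it."""
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def next_word_probabilities(followers: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follower counts into probabilities for the next word."""
    counts = followers.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def generate(followers: dict[str, Counter], start: str, length: int) -> str:
    """Greedily append the most likely next word; no fact is ever checked."""
    output = [start.lower()]
    for _ in range(length):
        counts = followers.get(output[-1])
        if not counts:
            break  # no known follower for this word, so stop
        output.append(counts.most_common(1)[0][0])
    return " ".join(output)


# A tiny, made-up corpus purely for illustration.
corpus = (
    "the cat sat on the mat . "
    "the cat ate the fish . "
    "the dog sat on the rug ."
)

model = train_bigrams(corpus)
print(next_word_probabilities(model, "the"))
print(generate(model, "the", 5))
```

On this corpus the generator produces “the cat sat on the cat”: fluent-looking, built entirely from likely word pairs, and meaningless. Scaled up enormously, that is the gap between looking intelligent and being right.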

Bing Provides Fake References

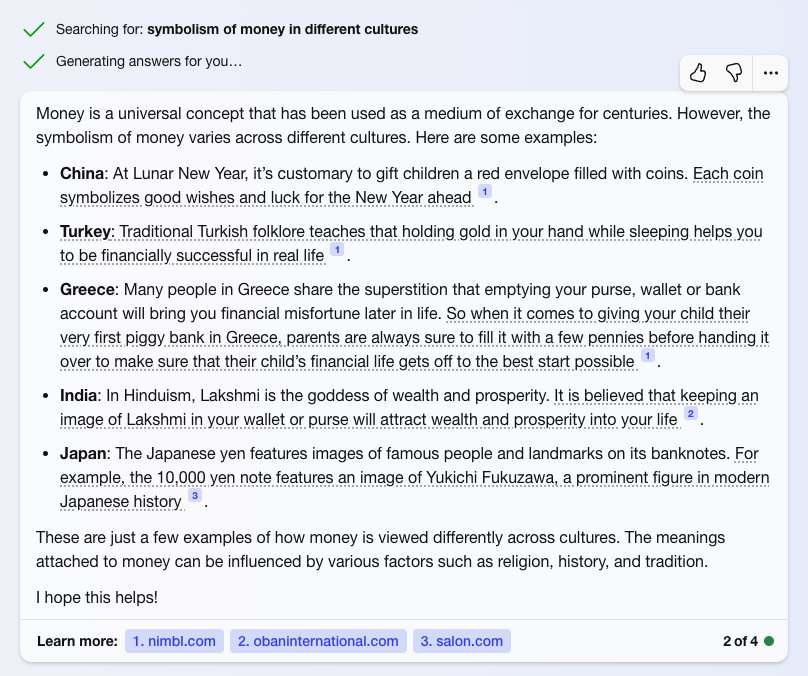

Bing uses ChatGPT to be ‘helpful’. And in many ways it is helpful. But it’s still not always factual. Worse than that, Bing now references where it gets its information from, using in-text citations.

That should be a good thing. It lets people click on a reference, go to the source document and see for themselves, which is exactly what I did recently, as you can see in the screenshot.

At first glance this looks fantastic. Bing has answered my question in depth and has linked to the sources where it found the facts. And actually, further research (using Google’s standard search this time) does, in this case, back up those facts.

But the sites Bing Chat has chosen as references don’t contain any information about those facts. ChatGPT has hallucinated them as references. If you search for ‘lakshmi’ on the page cited in reference 2, the word doesn’t appear at all. Nor is there anything about keeping pictures in your wallet. The same is true if you search for ‘Fukuzawa’ on the page cited in reference 3.

It’s like Dave down the pub (who’s actually called Rodney, of course) saying “Well, I heard on the News that…” when in fact he didn’t hear it on Newsnight; he heard it on “Not the Nine O’Clock News”, and it was a parody.
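The manual check described above, searching a cited page for a key term such as ‘lakshmi’, can be partly automated. Here is a minimal sketch, assuming you have already saved the text of each cited page (for example, copied from your browser). The function name `missing_terms` and the sample page text are hypothetical illustrations, not the actual pages Bing cited. A term being present doesn’t prove the page supports the claim, but a term being completely absent is a strong hint that the citation was hallucinated.

```python
import re


def missing_terms(page_text: str, terms: list[str]) -> list[str]:
    """Return the key terms that never appear in the cited page's text.

    Matching is case-insensitive and on whole words, so searching for
    'lakshmi' also finds 'Lakshmi' but not a longer word containing it.
    """
    missing = []
    for term in terms:
        pattern = r"\b" + re.escape(term) + r"\b"
        if not re.search(pattern, page_text, flags=re.IGNORECASE):
            missing.append(term)
    return missing


# Hypothetical page text standing in for a saved copy of a cited source.
cited_page = """
This page discusses the history of banknotes and how they are printed.
"""

# Key terms taken from the claim the citation is supposed to support.
claim_terms = ["lakshmi", "wallet", "banknotes"]
print(missing_terms(cited_page, claim_terms))
```

Any term this returns is one you should go and look for yourself before trusting, or repeating, the citation.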

Bing Chat Does Get It Right Sometimes Though

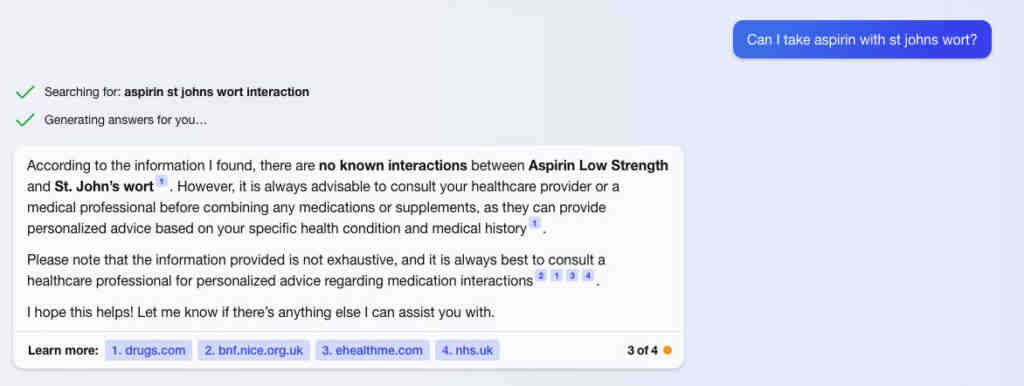

I tried Bing Chat with some queries about aspirin and St John’s Wort, as well as warfarin and St John’s Wort. To be fair to it, on this occasion it mostly got things right, and its references pointed to the right places too.

The answer in this example isn’t horrendous, even if it is vague. If you don’t check the sources it recommends you check, though, you might miss the cautions associated with taking St John’s Wort and aspirin together. But, at least on this occasion, the references it provides do discuss the exact subject matter and aren’t hallucinated.

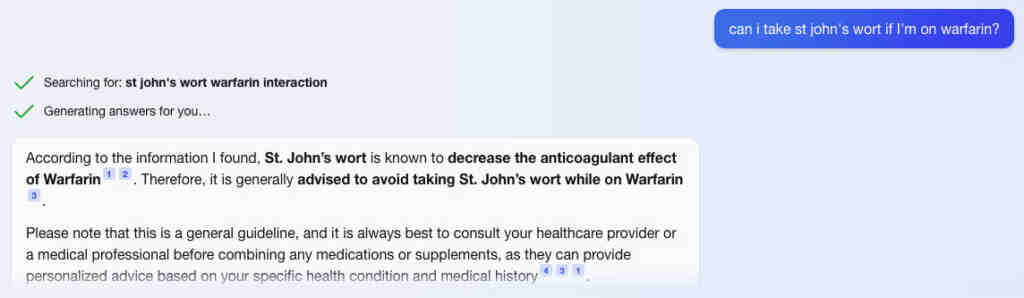

I got an even better result when I asked Bing about taking St John’s Wort with warfarin: it advised against the combination and cited sources that genuinely confirmed this.

Why This Matters

Bing and Google are considered sources of authoritative information by many. I would argue that of course they’re not, and their traditional approach of providing only links to other sites helps make that clearer. When Bing and Google provide the answer directly, they appear more authoritative than they are.

Coupled with the lay person’s understanding of the tool as ‘Artificially Intelligent’, this overplays the accuracy of the response being given. And in Bing’s case, by referencing where it found the information, it is definitely trying to look authoritative.

This is OK; indeed, it’s great, but only if the quoted sources actually discuss what has been referenced. People will take Bing’s word for it that the information was found on that site, and that gives the answer credibility it doesn’t necessarily deserve.

In my first example, none of the answers are life-changing even if the cited source isn’t actually the one saying them. But in the second and third examples (which Bing did get right on this occasion), a wrong answer backed by a misleading citation could very well be life-threatening.

False Information Becomes a Circular Problem

Admittedly, the very small sample I have used here produced information that’s actually correct (even the money example is correct; it’s the references that aren’t). But many people providing information today are doing so without fact-checking. The “make money online” gurus are actively encouraging the use of AI tools to create articles on topics the new authors know little to nothing about. And many of these new authors don’t know enough about a subject to fact-check it accurately, especially as the hallucination problem becomes more deeply embedded online.

When Bing Chat, or any AI tool, provides references that add weight to the credibility of the text, that text is likely to be included in a post (and cited) without being checked. Then, in a few months’ time, Bing may use that new article as an authoritative source on the topic and reference that new, un-fact-checked post. When someone later decides to fact-check what Bing has told them, they’ll see the topic discussed on that page and assume it’s right. Bing referenced it, and the content backs it up. But that content may have been produced from a hallucination.

Article Writers / Journalists Please Fact-Check!

I’m now seeing posts on prominent, historically authoritative sites being written by AI. Reputable (allegedly) sites are using AI to produce content that’s way outside their lane, and Google is ranking it highly because of the Expertise, Experience and Authority of the website, when the post itself has no expertise, experience or authority.

An example of this is an article in the science category at HowStuffWorks.com: https://science.howstuffworks.com/science-vs-myth/extrasensory-perceptions/1212-angel-number-meaning.htm

Scientifically, it’s complete and utter bollocks. And it was written by AI technology; they say so. HowStuffWorks.com is, according to ConsumerAction.org, “a source for clear, unbiased and reliable explanations of how things work”.

So you could come away from that site having done your research and been told that it’s likely true. Nothing in the post tells you it isn’t. Nothing in the post tells you that numerology, angels, or anything else in that post is pseudoscience. (This is a relatively outrageous example, because most people would know it’s pseudoscience, but what about subjects where that’s not so easy to spot?)

Now, I don’t mind a bit of belief in the unknown; quite the reverse. I’m aware that science doesn’t have all the answers. But from a fact-checking perspective, this post, on a science site, is nonsense and should never have been written.

And were it not for AI-generated content, I doubt it would have been written: it’s so far out of their lane that, hopefully, someone would have decided it didn’t fit the overall brand and wasn’t worth the effort. But this is low-effort journalism now.

Information Online May Become Less Reliable

The more embedded the circular fact-checking problem becomes in the information available online, the harder it becomes to fact-check accurately. And when previously reputable sites start publishing content they’ve “fact-checked” against this hallucinated data, the problem becomes even more deeply rooted.

The internet as a source of science and knowledge then becomes more and more diluted and less and less useful. The problem needs to be addressed now, before that happens.

If you’re writing factual posts (as opposed to editorial opinion like this post), whether for your fancy new blog that you hope will make you thousands a month or for a currently reputable online brand, it behooves you to fact-check your information diligently and accurately. And do it in a way that ensures you’re not snared by information that has already been hallucinated by an LLM, which is artificial but definitely not intelligent. As time goes by, this is likely to get harder and harder, so let’s all start now and try to keep the internet as accurate as possible.


Thanks for reading!